When we talk about integrating generative AI into products, the discussion often turns to conversational integration. In simpler terms, these are integration strategies that use natural language to understand users and respond to them accordingly.

At its core, a user engages with a computer as if conversing with another person. But it’s not quite like talking to a human: a large language model (LLM) has its own limitations, and the interaction can feel impersonal and bland. LLMs trained on extensive datasets tend to produce generic responses that don’t make for engaging conversations between applications and users.

What are personas and why should I care about them?

What truly separates human interaction from AI communication? Humans possess personality and persona — a representation or role they portray to the world, akin to donning a different hat for each occasion. A naturally reserved individual might still convey a warm and approachable persona in professional settings. An LLM, by contrast, doesn’t inherently have a personality or a persona — or does it?

Indeed, a large language model can exhibit personality traits shaped by its training data. We can influence its output somewhat through the sampling “temperature,” which controls how random the choice of each next token is. A high setting yields more varied, creative outputs, while setting it to zero makes the model (nearly) always pick the most likely token, resulting in responses that are direct and precise: a bit “German,” if you will. Between these extremes, we can choose a value that balances creativity and precision. But beyond such tweaks and fine-tuning, the personality is largely fixed.
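To make the temperature knob less abstract: at each step, the model assigns a raw score (a logit) to every candidate next token, and a common formulation divides those scores by the temperature before applying softmax. The minimal sketch below assumes that formulation; treating temperature 0 as "always take the top token" is a convention of this sketch, and real providers may handle it slightly differently. The logits are made-up numbers for three hypothetical tokens.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Treated as greedy decoding: all probability goes to the top-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.0, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the distribution flattening as the temperature rises: the top token dominates at low values, and the alternatives get a larger share at high values, which is where the more "creative" output comes from.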

However, a persona isn’t a default feature of an LLM. The model doesn’t inherently understand the ‘hat’ it is wearing. It doesn’t recognize whether the person interacting with it is a customer or an employee, whether your company’s tone is formal or casual, or even what your company represents. For instance, a chatbot in a funeral home should not start by saying, "Hey! I'm glad you asked. I'm happy to help you with that!" — a tone that would be perfectly acceptable for a toy store’s website.

Personas are prompts

The missing element for the model is context, which we need to provide. Crafting the right prompts is an art form. If we instruct the model with "You're an empathetic assistant at a funeral home," the bot will adopt this role in its responses. Through careful prompting, we give the AI context, instructing it to adopt the role that best represents our business. For our hypothetical toy store, the prompt might be: "Be friendly, always happy, and a bit casual, and occasionally include a smiley in your responses."
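In practice, a persona prompt usually goes into the system message so that it frames every reply. The sketch below assumes a chat-style API that accepts a list of role/content messages, as many providers do; it only builds that list and does not call any real API. The function `build_messages` and the constant names are hypothetical; the persona texts are the two examples above.

```python
# Hypothetical persona prompts taken from the examples in the text.
FUNERAL_HOME_PERSONA = "You're an empathetic assistant at a funeral home."
TOY_STORE_PERSONA = (
    "Be friendly, always happy, and a bit casual, "
    "and occasionally include a smiley in your responses."
)


def build_messages(persona: str, user_message: str) -> list[dict[str, str]]:
    """Place the persona in the system message, followed by the user's turn."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_message},
    ]


messages = build_messages(TOY_STORE_PERSONA, "Do you have any puzzles for a 6-year-old?")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Swapping `TOY_STORE_PERSONA` for `FUNERAL_HOME_PERSONA` changes the tone of the answers without touching anything else in the request.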

Incorporating a detailed persona description into your system prompt can lead to more consistent, engaging, and predictable interactions with your LLM. The model can then respond in a manner that aligns with your business’s values and more accurately portrays your brand identity.

These nuances give the LLM that extra bit of context, which can significantly improve how well its responses fit the setting.

LLMs and multiple personas

Your use case may warrant having a variety of personas on hand. Isn’t it true that we communicate differently with colleagues than with customers?

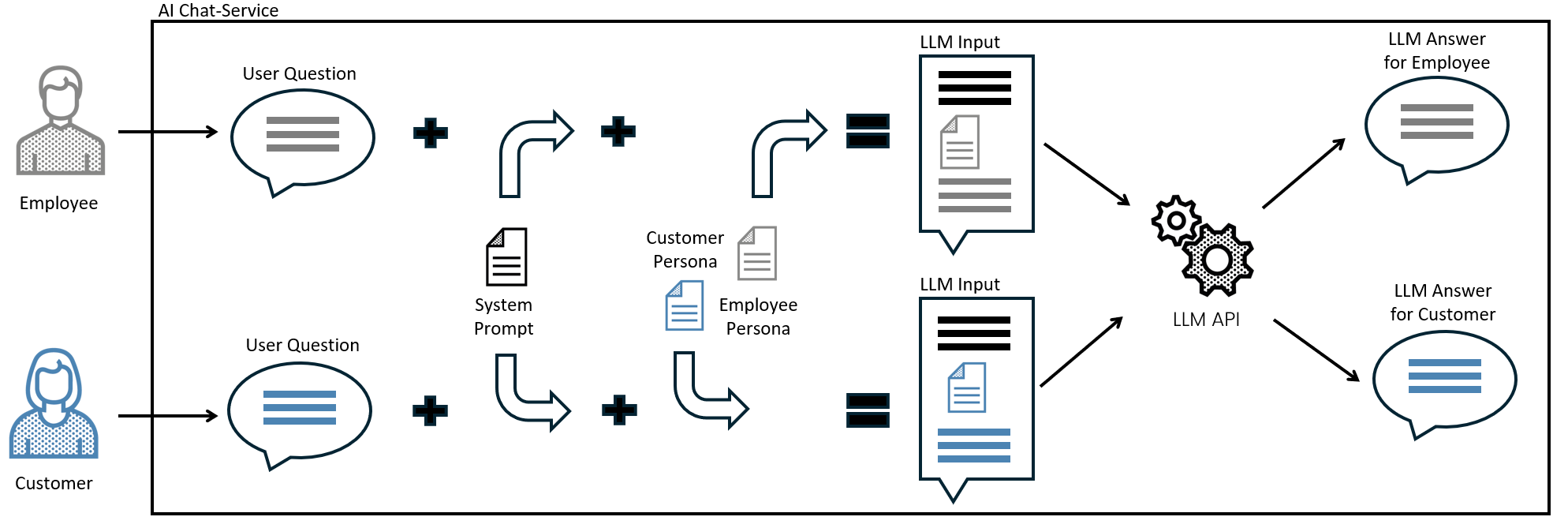

Similarly, you can use the same chatbot infrastructure for customer and employee interactions. Depending on who is currently engaging with the bot, you can append a different persona prompt. Customer interactions might come with instructions to maintain a professional and formal tone, whereas employee queries might prompt a more collegial and relaxed tone.
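One way to sketch this is a lookup from audience to persona, appended to a shared base prompt. How the application determines who is talking (for example, from the login session) is assumed here, and `Audience`, `BASE_PROMPT`, `PERSONAS`, and `system_prompt_for` are hypothetical names for illustration.

```python
from enum import Enum


class Audience(Enum):
    CUSTOMER = "customer"
    EMPLOYEE = "employee"


# Hypothetical base prompt shared by both audiences.
BASE_PROMPT = "You are the support assistant for our company."

PERSONAS = {
    Audience.CUSTOMER: "Maintain a professional and formal tone.",
    Audience.EMPLOYEE: "Use a collegial and relaxed tone, as you would with a coworker.",
}


def system_prompt_for(audience: Audience) -> str:
    """Append the persona that matches whoever is currently talking to the bot."""
    return f"{BASE_PROMPT} {PERSONAS[audience]}"


print(system_prompt_for(Audience.CUSTOMER))
print(system_prompt_for(Audience.EMPLOYEE))
```

Keeping the personas in one mapping means the rest of the chatbot infrastructure stays identical for both audiences; only the appended text changes.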

Personalization

Beyond generic context, we can give the LLM more personal information when it is available. This is common practice in Retrieval-Augmented Generation (RAG): a placeholder in the prompt allows relevant documents to be inserted, which the model then uses to generate better-informed responses.
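A minimal sketch of such a placeholder, using Python's standard `string.Template`: the retrieval step itself (for example, a vector search) is out of scope and is represented by a hard-coded list of stand-in documents. The template wording and `fill_rag_prompt` are hypothetical.

```python
from string import Template

# Hypothetical RAG prompt template: $context is the placeholder that the
# retrieved documents are inserted into before the prompt is sent to the model.
RAG_TEMPLATE = Template(
    "Answer the question using only the context below.\n"
    "Context:\n$context\n\n"
    "Question: $question"
)


def fill_rag_prompt(question: str, retrieved_docs: list[str]) -> str:
    """Join the retrieved documents and substitute them into the template."""
    context = "\n---\n".join(retrieved_docs)
    return RAG_TEMPLATE.substitute(context=context, question=question)


docs = [  # stand-ins for whatever a retriever returned
    "Invoices are sent on the 1st of each month.",
    "Payment is due within 30 days.",
]
print(fill_rag_prompt("When is my invoice due?", docs))
```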

Applying the same principle, we can use a persona prompt template with placeholders for personalization. Imagine a bot integrated into an internal support application that has access to employee data. Knowing the user allows for prompts like: "You are a helpful chatbot for an IT consultancy's support application. Respond like a good colleague. Maintain a friendly and professional tone but feel free to be a bit more casual at times. You're speaking to Sebastian, a 'developer consultant'."

This personalization allows the bot to address users by name and understand their roles, tailoring its guidance accordingly: for instance, explaining billing more thoroughly to someone outside the accounting department while offering more concise information to a billing specialist.
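The persona template from the example above can be sketched with placeholders for the user's name and role, plus a hint about how much detail to give. The `Employee` record, its fields, and the rule "accounting gets concise billing answers" are assumptions for illustration, not a description of any particular application's data model.

```python
from dataclasses import dataclass
from string import Template


@dataclass
class Employee:
    # Hypothetical shape of the employee record the support app can read.
    name: str
    role: str
    department: str


PERSONA_TEMPLATE = Template(
    "You are a helpful chatbot for an IT consultancy's support application. "
    "Respond like a good colleague. Maintain a friendly and professional tone "
    "but feel free to be a bit more casual at times. "
    "You're speaking to $name, a '$role'. $detail_hint"
)


def personalized_prompt(user: Employee) -> str:
    # Illustrative rule: people in accounting get concise billing answers,
    # everyone else gets fuller explanations.
    if user.department == "accounting":
        detail_hint = "Keep billing explanations concise; they know the domain."
    else:
        detail_hint = "Explain billing topics thoroughly; they may be unfamiliar with them."
    return PERSONA_TEMPLATE.substitute(
        name=user.name, role=user.role, detail_hint=detail_hint
    )


print(personalized_prompt(Employee("Sebastian", "developer consultant", "engineering")))
```

Because the template is filled per request, the same bot can greet each user by name and adjust its depth of explanation without maintaining a separate persona for every employee.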

Conclusion

Infusing AI with personas is more than a mere technical adjustment; it’s about enriching the user experience and ensuring that interactions echo the nuances of human communication. A carefully calibrated persona brings warmth and relevance to an otherwise cold engagement, transforming functional exchanges into memorable connections.

It’s a subtle art that, when mastered, gives users the sense that behind the code lies an understanding and responsive presence — a digital friend tailored to the moment, capable of empathy and poised to meet needs with precision. As technology continues to evolve, the integration of personas in AI will only heighten the sense of interactivity and personal touch that is fast becoming the benchmark in user experience.