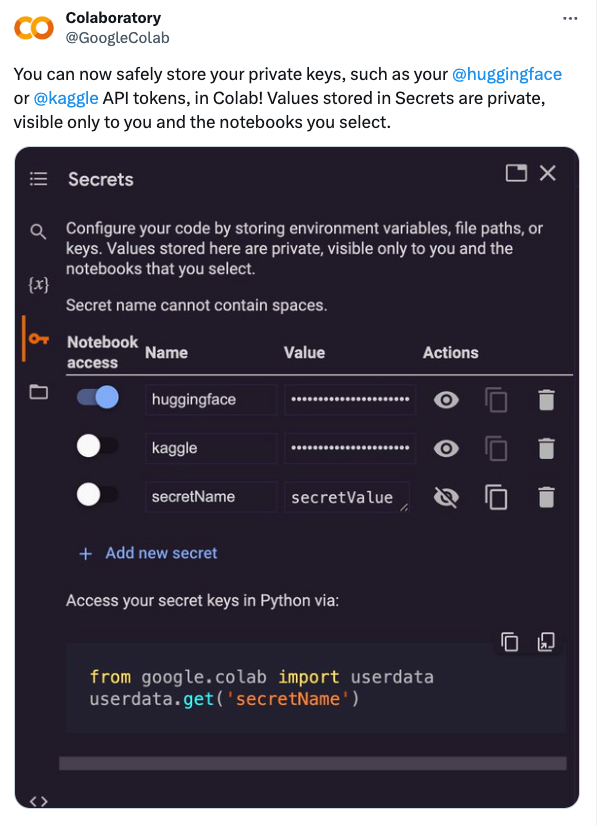

There’s some exciting news from the world of Google Colab that I can’t wait to share with you as an update to my article Securing Colab Notebooks – Protecting Your OpenAI API Keys. Remember the days when securing API keys and sensitive data in Colab notebooks seemed like a Herculean task? Well, those days are over, thanks to the newly introduced Colab Secrets feature. It was announced by the Colab team a few days ago on X.

Let’s dive into what this means for us and how it’s going to make our work in Colab notebooks easier.

Key Features of “Secrets” in Colab Notebooks

You might recall my previous article about using Google Cloud Platform’s (GCP) Secret Manager to protect our OpenAI API keys. However, Google Colab has now introduced a simpler method with their new Colab Secrets feature, which is generating a lot of excitement.

Let me explain the benefits of this new feature:

- Simplified code configuration: No more juggling with complex setups. Now, you can securely store all your environment variables, file paths, or keys in one place.

- Privacy guaranteed: Your secrets are only accessible to you and the notebooks you choose. Share your notebooks without any worry!

- User-friendly interface: Add, access, and manage your secrets with ease, directly within Google Colab.

- Enhanced security: As confirmed by the Google Colab team themselves, this feature is intended for storing private keys for platforms like OpenAI, Hugging Face or Kaggle, so the keys no longer need to appear in the notebook itself.

Step-by-Step Guide to Using Colab Secrets for API Key Protection

Ready to make your coding life a lot easier? Here’s a step-by-step guide on how to use Colab Secrets:

- Open Google Colab and navigate to the new Secrets section.

- Add a new secret by entering its name and value. Remember, while you can change the value, the name is set in stone.

- The list of secrets is global and visible in all your notebooks. Decide on a notebook-by-notebook basis whether you want to give the notebook access to a secret by toggling the “Notebook access” switch on or off.

- To use a secret in your notebook, simply incorporate the following code snippet, replacing <name_of_your_secret> with the name of your secret:

```python
from google.colab import userdata

my_secret_key = userdata.get('<name_of_your_secret>')
```

- Keep in mind, if your secret is numeric, you’ll need to convert it from string to the appropriate numeric type.

- Secrets, once added, are accessible in all your notebooks. To use a secret in a different notebook, just grant access to that notebook.
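The numeric conversion mentioned above can be sketched as follows. This is only a minimal illustration: `read_numeric_secret` is a hypothetical helper of my own, not part of Colab, and the secret names `MAX_TOKENS` and `TEMPERATURE` are made up for the example. The getter is passed in as an argument so the snippet also runs outside Colab, where a plain dictionary stands in for `userdata.get`.

```python
def read_numeric_secret(get_secret, name, cast=int):
    """Fetch a secret (always a string) and convert it with `cast`."""
    raw = get_secret(name)
    try:
        return cast(raw.strip())
    except ValueError:
        # Suppress the original error so the raw secret value
        # does not end up in the traceback or the message.
        raise ValueError(f"Secret {name!r} is not a valid {cast.__name__}") from None


# In Colab you would pass the real getter (assumed usage):
#   from google.colab import userdata
#   max_tokens = read_numeric_secret(userdata.get, "MAX_TOKENS")

# Outside Colab, a stand-in dictionary shows the behavior.
fake_secrets = {"MAX_TOKENS": "256", "TEMPERATURE": "0.2"}
max_tokens = read_numeric_secret(fake_secrets.__getitem__, "MAX_TOKENS")
temperature = read_numeric_secret(fake_secrets.__getitem__, "TEMPERATURE", cast=float)
print(max_tokens + 1, temperature)
```

Keeping the conversion in one place means a typo in the stored value fails early with a readable message instead of surfacing later as a confusing type error.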

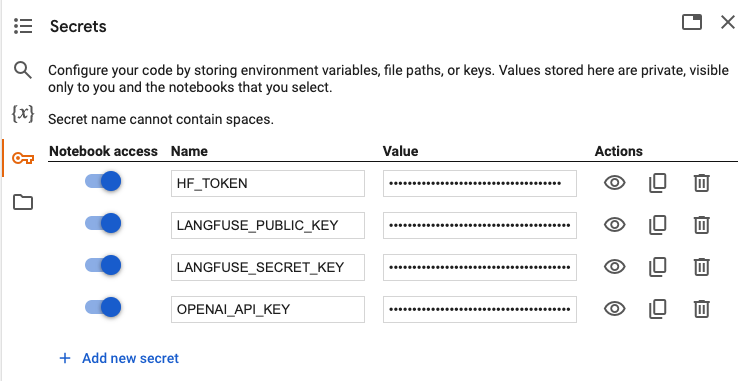

- Tip: Some Python modules expect API keys as environment variables. In this case, import the secrets like this:

```python
import os

# import Colab Secrets userdata module
from google.colab import userdata

# set OpenAI API key
os.environ["OPENAI_API_KEY"] = userdata.get('OPENAI_API_KEY')

# set Langfuse API keys
os.environ["LANGFUSE_PUBLIC_KEY"] = userdata.get('LANGFUSE_PUBLIC_KEY')
os.environ["LANGFUSE_SECRET_KEY"] = userdata.get('LANGFUSE_SECRET_KEY')
```

- Tip: If you press the ‘Copy value’ icon in the action section, the value will become visible. So don’t do this when you’re screen sharing or screen recording your notebook!
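If you export several secrets as environment variables, as in the tip above, a small loop can save some repetition. The sketch below is just one possible approach: `export_secrets` is a hypothetical helper, not a Colab function, and it deliberately treats every lookup failure the same way (unknown name, access not granted, empty value). The demo values are placeholders so the snippet runs outside Colab.

```python
import os


def export_secrets(get_secret, names, environ=os.environ):
    """Copy each named secret into `environ`; return the names that failed."""
    missing = []
    for name in names:
        try:
            value = get_secret(name)
        except Exception:
            # Assumption: any lookup failure is simply recorded as missing.
            missing.append(name)
            continue
        if not value:
            missing.append(name)
            continue
        environ[name] = value
    return missing


# In Colab you would pass the real getter (assumed usage):
#   from google.colab import userdata
#   missing = export_secrets(userdata.get, ["OPENAI_API_KEY", "LANGFUSE_PUBLIC_KEY"])

# Outside Colab, a dictionary stands in for the secrets store
# and a separate dict stands in for os.environ.
demo_env = {}
demo_secrets = {"OPENAI_API_KEY": "sk-demo", "LANGFUSE_PUBLIC_KEY": "pk-demo"}
missing = export_secrets(
    demo_secrets.__getitem__,
    ["OPENAI_API_KEY", "LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY"],
    environ=demo_env,
)
print("Missing secrets:", missing)
```

Returning the list of missing names, rather than printing values, keeps the secrets themselves out of the notebook output.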

Closing Thoughts: The Shift to Colab Secrets from GCP Secret Manager

So, there you have it! With the introduction of Colab Secrets, it’s time to say goodbye to the complexity of using Google Cloud Platform (GCP) Secret Manager for our notebooks. The new feature keeps our API keys out of the notebook code and makes them far easier to manage. Happy coding and keep those secrets safe!