Support for OpenAI Function Calling was added to Azure OpenAI a couple of weeks ago and can be used for new deployments with the latest gpt-35-turbo and gpt-4 models. In this short article, I’ll guide you through building a simple app that leverages generative AI powered by Azure OpenAI and integrates with third-party API endpoints and local functionality using OpenAI Function Calling.

All source code shown in this article is available here on GitHub.

Necessary infrastructure deployment

You need an instance of the Azure OpenAI service deployed to your Azure subscription. If you want to create and maintain your infrastructure robustly and reliably, consider reading my article “Run your GPT-4 securely in Azure using Azure OpenAI Service” which explains how to deploy Azure OpenAI (with private network infrastructure) using HashiCorp Terraform.

Caution: Consider creating a fresh deployment if you have an existing gpt-35-turbo or gpt-4 deployment in your Azure OpenAI instance. I noticed that existing deployments did not support Function Calling and responded to valid HTTP requests with HTTP 500.

The Sample Scenario

For demonstration purposes, we’ll build an application that can answer questions about characters and spaceships from the Star Wars saga. The facts come from the Star Wars API, which provides structured data about everything related to Star Wars. To control how our assistant responds to incoming questions, we define a proper system prompt. Finally, we instruct the LLM to construct answers as Markdown.

That being said, we’ll create four “functions” that the Large Language Model (LLM) could use to gather correct and reliable information.

- The get_character_details function could be used by the LLM to retrieve detailed information about a particular character (e.g., Leia Organa)
- The get_spaceship_name function could be used by the LLM to retrieve the name of a particular spaceship using its identifier (e.g., TIE Advanced x1)
- The get_spaceship_names function could be used by the LLM to retrieve the names of different spaceships, accepting a list of spaceship identifiers (e.g., TIE Advanced x1, N-1 starfighter)
- The markdownify function could be used by the LLM to style plain text using Markdown modifiers for bold and italic (e.g., Leia Organa becomes **Leia Organa**)

Function Implementation

In this sample, we’ll use Python and LangChain to interact with our Azure OpenAI instance. LangChain offers multiple ways to implement a “function” (or, as LangChain calls it, a “tool”).

Although creating tools from existing Python functions seems like the most straightforward approach at first, I prefer implementing tools by subclassing the BaseTool class of langchain.tools and specifying the tool interface using a Pydantic model class. This approach feels more readable and reliable.

That said, we’ll now take a look at two tools. First, we’ll revisit the get_spaceship_name tool. Second, we will see how the markdownify tool is implemented. This ensures you have a comprehensive overview of how to implement individual tools that LangChain could invoke if the LLM decides to.

The get_spaceship_name tool

We expect our tool to be called using a spaceship identifier. The Star Wars API uses integers as identifiers for all resources. The schema of our tool arguments is pretty easy:

```python
from pydantic import BaseModel, Field

class GetSpaceshipNameModel(BaseModel):
    id: int = Field(..., description="The id of a spaceship")
```

With our schema in place, we can move on and define the get_spaceship_name tool.

```python
from typing import Type

import requests
from langchain.tools import BaseTool
from pydantic import BaseModel

class GetSpaceshipNameTool(BaseTool):
    # Metadata the LLM uses to decide when to call this tool
    name: str = "get_spaceship_name"
    description: str = "A tool to retrieve the name of a single spaceship using its identifier"
    args_schema: Type[BaseModel] = GetSpaceshipNameModel

    def _run(self, id: int):
        # Call the Star Wars API; fail early on HTTP errors instead of a KeyError later
        res = requests.get("https://swapi.dev/api/starships/" + str(id), timeout=10)
        res.raise_for_status()
        spaceship = res.json()
        return spaceship["name"] + " (" + spaceship["model"] + ")"
```

First, we provide essential metadata (name and description) to describe the purpose of our tool. Additionally, we set args_schema and point to the GetSpaceshipNameModel class we created before.

The actual implementation of the tool uses the requests package for calling into the Star Wars API. Finally, we construct the return value by concatenating the name and the model property retrieved from the API.
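To make the role of that metadata more tangible, here is a rough, hand-written sketch of the function definition the LLM could receive for this tool. It assumes the OpenAI functions format (a name, a description, and a JSON Schema under parameters). The exact JSON that LangChain derives from the Pydantic model is not shown in this article and may contain additional fields.

```python
import json

# Hand-written approximation of the function definition for get_spaceship_name.
# Assumption: LangChain builds something similar from the tool's name,
# description, and args_schema; the real payload may carry extra fields.
get_spaceship_name_definition = {
    "name": "get_spaceship_name",
    "description": "A tool to retrieve the name of a single spaceship using its identifier",
    "parameters": {
        "type": "object",
        "properties": {
            "id": {"type": "integer", "description": "The id of a spaceship"},
        },
        "required": ["id"],
    },
}

# Pretty-print the definition as it might appear in a request body
print(json.dumps(get_spaceship_name_definition, indent=2))
```

The descriptions are the only hints the LLM gets about when and how to call the tool, so it pays off to word them carefully.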

The markdownify tool

As mentioned before, we want our LLM to respond using Markdown. The LLM should format character names in bold and spaceship names in italics.

Although LLMs can create Markdown without using OpenAI Function Calling, we provide a local implementation for demonstration purposes.

The argument schema for our markdownify tool defines the three arguments we expect: the plain text, a flag indicating whether it should be formatted as bold text, and a flag indicating whether it should be formatted as italic text. Providing bold and italic as separate flags lets us change the LLM instructions later and also format text as both bold and italic.

```python
from pydantic import BaseModel, Field

class MarkdownifyModel(BaseModel):
    text: str = Field(..., description="Text to be formatted")
    bold: bool = Field(False, description="Whether to bold the text")
    italic: bool = Field(False, description="Whether to italicize the text")
```

The tool implementation is not that sophisticated. We inspect the arguments and surround the text using the correct Markdown qualifiers:

```python
from typing import Type

from langchain.tools import BaseTool

class MarkdownifyTool(BaseTool):
    name: str = "markdownify"
    description: str = "A tool to format text in markdown. It can make text bold, italic, or bold-italic."
    args_schema: Type[MarkdownifyModel] = MarkdownifyModel

    # The signature matches the three fields of MarkdownifyModel
    def _run(self, text: str, bold: bool = False, italic: bool = False):
        if not text:
            return text
        if bold:
            text = "**" + text + "**"
        if italic:
            text = "*" + text + "*"
        return text
```
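To see how the two flags combine, the bold and italic logic can be tried in isolation. The following is a minimal sketch that copies that logic into a plain function (the standalone markdownify function is only for illustration and is not part of the sample app):

```python
def markdownify(text: str, bold: bool = False, italic: bool = False) -> str:
    """Wrap text in Markdown markers, mirroring the bold and italic handling of the tool."""
    if not text:
        return text
    if bold:
        text = "**" + text + "**"
    if italic:
        text = "*" + text + "*"
    return text


print(markdownify("Leia Organa", bold=True))            # **Leia Organa**
print(markdownify("Millennium Falcon", italic=True))    # *Millennium Falcon*
print(markdownify("Han Solo", bold=True, italic=True))  # ***Han Solo***
```

Because bold is applied before italic, setting both flags yields three asterisks on each side, which Markdown renders as bold-italic.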

Register tools with individual LangChain Agent

We must tell the LLM that it can use our tools. In LangChain, we can use an Agent to do so. However, before we look at creating the actual agent, we create an instance of AzureChatOpenAI:

```python
import os

from langchain.chat_models import AzureChatOpenAI

llm = AzureChatOpenAI(
    openai_api_key=os.getenv("OPENAI_API_KEY"),
    openai_api_base=os.getenv("OPENAI_API_BASE"),
    openai_api_version=os.getenv("OPENAI_API_VERSION"),
    openai_api_type=os.getenv("OPENAI_API_TYPE"),
    deployment_name=os.getenv("AZURE_OPENAI_DEPLOYMENT_NAME"),
    model_name=os.getenv("MODEL_NAME"),
)
```
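Note that os.getenv returns None for variables that are not set, so a missing value only surfaces later as a less obvious error. As an optional safeguard, a small check like the following sketch could run before the client is created. The variable names are the ones used above; the helper find_missing_variables is a hypothetical name introduced here.

```python
import os

# Environment variable names taken from the AzureChatOpenAI setup above
REQUIRED_VARIABLES = [
    "OPENAI_API_KEY",
    "OPENAI_API_BASE",
    "OPENAI_API_VERSION",
    "OPENAI_API_TYPE",
    "AZURE_OPENAI_DEPLOYMENT_NAME",
    "MODEL_NAME",
]


def find_missing_variables(environ=os.environ):
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


missing = find_missing_variables()
if missing:
    print("Missing environment variables: " + ", ".join(missing))
```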

Next, we’ll create a simple list containing all individual tools:

```python
tools = [
    GetCharacterDetailsTool(),
    GetSpaceshipNameTool(),
    GetSpaceshipNamesTool(),
    GetSpaceshipInfoTool(),
    MarkdownifyTool()
]
```

Finally, we use the initialize_agent function provided by LangChain to construct our agent.

```python
from langchain.agents import AgentType, initialize_agent

agent = initialize_agent(tools, llm, agent=AgentType.OPENAI_FUNCTIONS, verbose=True)
```

Interacting with the LLM

To send queries to the LLM, we can call agent.run() and provide our prompt. As always, it makes sense to prefix the actual input of the user with instructions to control the behavior of our LLM (system prompt). As shown in the code below, we limit the LLM to answer only questions related to characters and spaceships from the Star Wars franchise. On top of that, we instruct the LLM to construct answers in Markdown and ensure every character name will be printed in bold and every spaceship name in italics.

```python
# Grab the user's input
question = input("What can I do for you?\n")

# Create the prompt
prompt = """
You're an assistant for fans of the Star Wars franchise.
Answer questions about characters and spaceships from the Star Wars universe.
Construct markdown and style character names in bold and spaceship names in italics.
Answer questions that are not related to characters or spaceships of Star Wars with "This question, I can not answer.".

Question: {question}
"""

# Fill in the question and hand the prompt to the agent
print(agent.run(prompt.format(question=question)))
```

We enabled verbosity (verbose=True) when creating the agent. This makes LangChain print log messages to stdout when interacting with the LLM. For example, see this output generated by the script when asking, “What spaceships has Han flown?”:

```
What can I do for you?
What spaceships has Han flown?

> Entering new AgentExecutor chain...

Invoking: `get_character_info` with `{'name': 'Han'}`

{'id': 14, 'name': 'Han Solo', 'gender': 'male', 'height': '180', 'hair_color': 'brown', 'eye_color': 'brown', 'birth_year': '29BBY', 'weight': '80', 'spaceships': [10, 22]}

Invoking: `get_spaceship_names` with `{'ids': [10, 22]}`

['Millennium Falcon (YT-1300 light freighter)', 'Imperial shuttle (Lambda-class T-4a shuttle)']

Invoking: `markdownify` with `{'text': 'Han Solo', 'bold': True}`

**Han Solo**

Invoking: `markdownify` with `{'text': 'Millennium Falcon (YT-1300 light freighter)', 'italic': True}`

*Millennium Falcon (YT-1300 light freighter)*

Invoking: `markdownify` with `{'text': 'Imperial shuttle (Lambda-class T-4a shuttle)', 'italic': True}`

*Imperial shuttle (Lambda-class T-4a shuttle)*

**Han Solo** has flown the *Millennium Falcon (YT-1300 light freighter)* and the *Imperial shuttle (Lambda-class T-4a shuttle)*.

> Finished chain.

**Han Solo** has flown the *Millennium Falcon (YT-1300 light freighter)* and the *Imperial shuttle (Lambda-class T-4a shuttle)*.
```

The verbose output also indicates the “ping-pong” that our code plays with the LLM. Every time the LLM decides to use OpenAI Function Calling, the following happens:

- We receive instructions on how to call the tool as part of the HTTP response

- LangChain takes those instructions and invokes the tool with arguments specified by the LLM

- The result of the tool is appended to the previous request payload

- The new payload is sent to the LLM

This procedure is repeated until the LLM comes up with the final answer.
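The loop described above can be sketched in plain Python without LangChain. This is a simplified illustration, not LangChain's actual implementation: fake_llm is a hypothetical stand-in for the HTTP call to the deployed model, and the message shapes assume the OpenAI functions format, where the assistant reply carries a function_call with JSON-encoded arguments and the tool result is sent back as a message with the role function.

```python
import json


def markdownify(text, bold=False, italic=False):
    """Local tool: wrap text in Markdown markers."""
    if bold:
        text = "**" + text + "**"
    if italic:
        text = "*" + text + "*"
    return text


# Registry that maps tool names to local Python callables
TOOLS = {"markdownify": markdownify}


def fake_llm(messages):
    """Hypothetical stand-in for the HTTP call to the deployed model.

    It requests one tool call first and returns a final answer as soon as
    a function result is part of the conversation.
    """
    function_results = [m for m in messages if m["role"] == "function"]
    if not function_results:
        arguments = json.dumps({"text": "Han Solo", "bold": True})
        return {
            "role": "assistant",
            "content": None,
            "function_call": {"name": "markdownify", "arguments": arguments},
        }
    return {"role": "assistant", "content": function_results[-1]["content"] + " is a pilot."}


def run_conversation(llm, question):
    messages = [{"role": "user", "content": question}]
    while True:
        reply = llm(messages)  # send the payload, receive the response
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:  # no tool requested, so this is the final answer
            return reply["content"]
        arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
        result = TOOLS[call["name"]](**arguments)  # invoke the tool locally
        # Append the tool result so that the next request contains it
        messages.append({"role": "function", "name": call["name"], "content": str(result)})


print(run_conversation(fake_llm, "Who is Han Solo?"))  # **Han Solo** is a pilot.
```

The important detail is that the model never executes anything itself. It only returns the name of a function and its arguments, and our application decides whether and how to run it.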

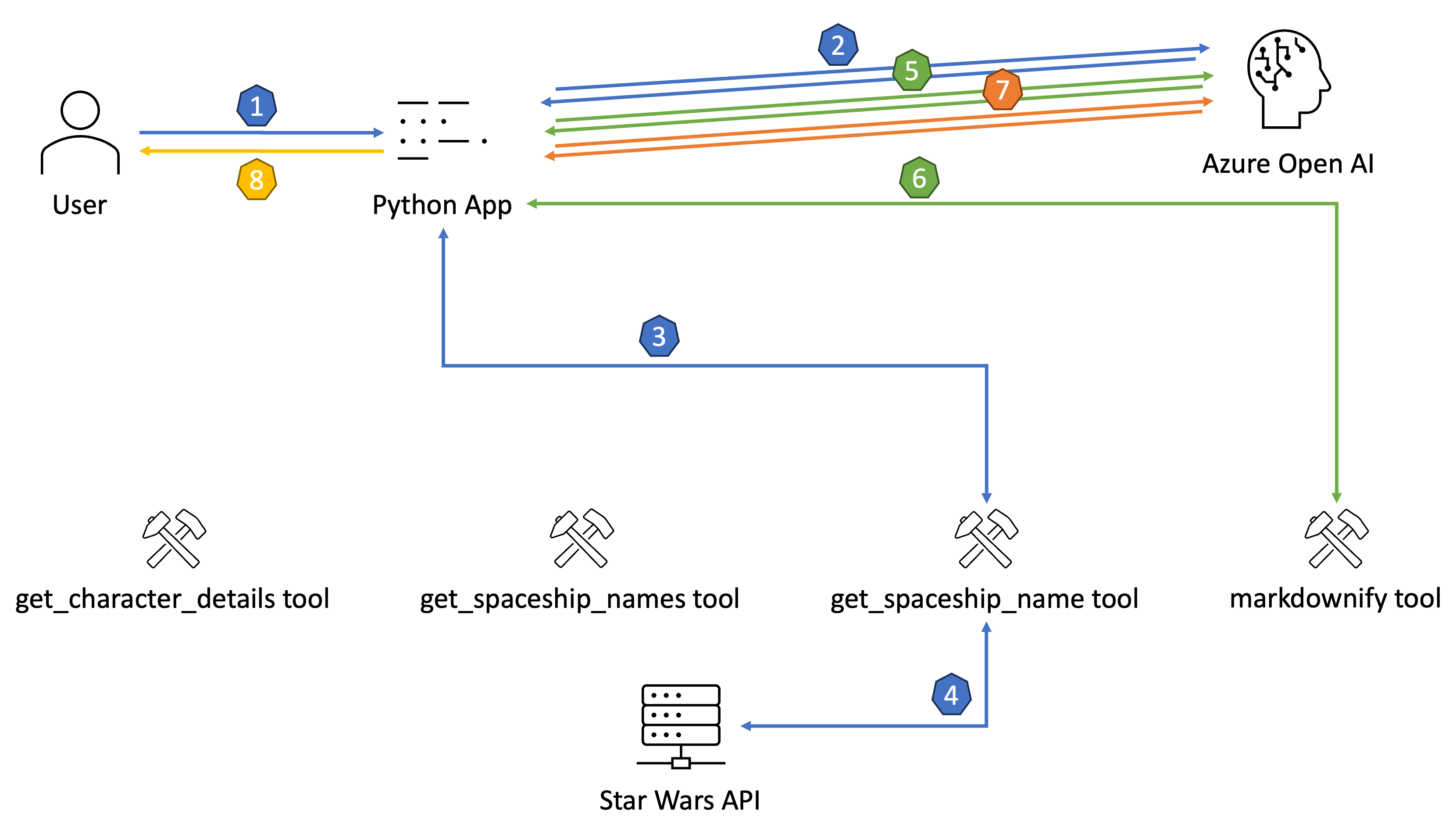

HTTP request flow when using OpenAI Function Calling

Although the sample illustrated here is quite simple, quite a bit of interaction happens between the Python application and the LLM we deployed to Azure. Please look at the following illustration outlining which requests are made and how data flows between our app, the LLM, and the user. (Tools are displayed separately for illustration purposes.)

- The user asks a question
- The prompt is built and sent to the LLM in Azure OpenAI
- The LLM processes the prompt and decides that calling the get_spaceship_name tool is necessary
  - The LLM responds to the HTTP call and sends instructions for calling the get_spaceship_name tool as part of the HTTP response body
- LangChain interprets the HTTP response and invokes the get_spaceship_name tool with arguments provided by the LLM
  - The get_spaceship_name tool takes the spaceship identifier (received as an argument) and calls the Star Wars API to obtain the name of the desired spaceship
- LangChain takes the spaceship name, adds it to the HTTP request payload, and sends everything to the LLM
- Again, the LLM does some processing and decides that calling the markdownify tool would be the best thing to do
  - The LLM responds to the HTTP call and sends instructions for calling the markdownify tool as part of the HTTP response body
- LangChain interprets the HTTP response and invokes the markdownify tool with arguments provided by the LLM
  - The markdownify tool adds proper Markdown formatting to the text
- LangChain takes the Markdown, adds it to the HTTP request payload, and sends everything to the LLM
- Again, the LLM does some processing and decides that it has found the final answer to the question asked by the user
- LangChain receives the final answer from the LLM and presents it to the user

Conclusion

Using OpenAI Function Calling with the latest gpt-35-turbo and gpt-4 deployments in Azure OpenAI is super handy and allows deep integration of LLMs with existing APIs.

When writing custom tools, we can leverage base classes provided by LangChain and lay out the argument schema using Pydantic. Following this pattern, we ensure our code is structured nicely and present precise instructions to the LLM for invoking those tools.

Although LangChain makes OpenAI Function Calling a simple task, it hides constructing HTTP request payloads, interpreting LLM responses, and invoking our custom tools. IMO, it is super important to understand how requests flow through the architecture when using OpenAI Function Calling and what the actual request and response payloads look like.